Docker is a powerful tool increasingly used by developers and operations teams, especially in companies that have adopted a DevOps approach.

Docker lets applications run the same way in any environment, because containers are independent of the underlying host system.

Docker technology encourages collaborative work between developers and administrators and provides an environment that reduces silos between different teams.

Docker's significance comes not only from the technology itself but also from its rich ecosystem, which is becoming more and more accessible to businesses.

Orchestration is one of the technologies that revolve around Docker. Google created Kubernetes, Amazon created ECS, Mesosphere created Marathon for Apache Mesos, and Docker Inc. initiated Swarm.

The advantage of Docker Swarm is that it is already integrated into Docker by default.

Although Google, Red Hat, Docker Inc., the Open Container Initiative, and other organizations are working to separate Docker (the technology) from Docker Inc. (the company), Docker Swarm remains the most accessible orchestration technology.

In what follows, we will see how to create a Docker image, a container, and a service, and then how to deploy them with Swarm.

1. Installation and Prerequisites

A Docker container runs the same way on Ubuntu, Fedora, or any other OS; however, the installation method differs.

In what follows, we use Ubuntu 16.04 as the operating system.

For infrastructure, we use AWS, but any other provider works as long as the cluster machines can communicate with each other and the port requirements described in section 3 are met.

You can also create your cluster with Docker Machine, a Docker tool that provisions Docker hosts on various cloud providers (AWS, GCE, DigitalOcean) as well as on local machines with VirtualBox.
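If you go the Docker Machine route, provisioning the three nodes used in section 3 as local VirtualBox VMs could look like the sketch below. It assumes VirtualBox and Docker Machine are installed, and the node names `manager1`, `worker1`, and `worker2` are hypothetical. Docker has since deprecated Docker Machine, so check that it is still available for your platform.

```shell
#!/usr/bin/env bash
# Sketch: provision one manager and two workers as local VirtualBox VMs.
# The node names are hypothetical; adapt them to your own naming scheme.
create_swarm_nodes() {
  local node
  for node in manager1 worker1 worker2; do
    # --driver virtualbox creates a local VM with Docker preinstalled
    docker-machine create --driver virtualbox "$node" || return 1
  done
}
```

After running `create_swarm_nodes`, `docker-machine ssh manager1` opens a shell on the manager. Machines created this way already have Docker installed, so the installation steps below can be skipped for them.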

First, install Docker on every machine. If an older version of Docker is installed, start by uninstalling it:

```shell
sudo apt-get remove docker docker-engine docker.io
```

Then, install the following packages:

```shell
sudo apt-get update
sudo apt-get install apt-transport-https ca-certificates curl software-properties-common
```

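Import Docker's official GPG key and check its fingerprint:

```shell
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -
# The displayed fingerprint should end with 0EBF CD88
sudo apt-key fingerprint 0EBFCD88
```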

Add the stable repository that matches your machine's architecture:

- For amd64:

```shell
sudo add-apt-repository \
   "deb [arch=amd64] https://download.docker.com/linux/ubuntu \
   $(lsb_release -cs) \
   stable"
```

- For armhf:

```shell
sudo add-apt-repository \
   "deb [arch=armhf] https://download.docker.com/linux/ubuntu \
   $(lsb_release -cs) \
   stable"
```

- For s390x:

```shell
sudo add-apt-repository \
   "deb [arch=s390x] https://download.docker.com/linux/ubuntu \
   $(lsb_release -cs) \
   stable"
```

Finally, install the latest version of Docker:

```shell
sudo apt-get update
sudo apt-get install docker-ce
```
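To confirm that the installation works, a quick check could look like this (a sketch; `verify_docker_install` is a hypothetical helper name, and pulling `hello-world` requires Internet access):

```shell
#!/usr/bin/env bash
# Sketch: confirm that the Docker client can reach the daemon and run a container.
verify_docker_install() {
  # Prints client and server versions; fails if the daemon is not running
  sudo docker version || return 1
  # Pulls and runs the hello-world image, removing the container afterwards
  sudo docker run --rm hello-world
}
```

Call `verify_docker_install` on each machine; the `hello-world` container prints a confirmation message and exits.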

2. Docker Swarm Architecture

Before creating or managing clusters, it is essential to understand how Docker Swarm works and how it is architected.

Docker 1.12 introduced a significant addition: Swarm mode.

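Swarm mode builds cluster management and orchestration directly into the Docker Engine, which removed several difficulties: a swarm no longer requires running separate Swarm containers or an external key-value store for discovery.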
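A script can check whether a given engine already has Swarm mode enabled by reading the `.Swarm.LocalNodeState` field that `docker info --format` exposes; it reads `inactive` until the node initializes or joins a swarm. A minimal sketch, with hypothetical function names:

```shell
#!/usr/bin/env bash
# Sketch: report whether this Docker Engine is part of a swarm.
# .Swarm.LocalNodeState is "inactive" until the node initializes or joins a swarm.
swarm_state() {
  docker info --format '{{.Swarm.LocalNodeState}}'
}

# Succeeds (exit status 0) only when Swarm mode is active on this engine
is_swarm_active() {
  [ "$(swarm_state)" = "active" ]
}
```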

Docker Swarm serves the standard Docker API, so any tool that already communicates with a Docker daemon can use Docker Swarm to scale transparently: Dokku, Docker Compose, Krane, Flynn, Deis, DockerUI, Shipyard, Drone, Jenkins, etc.

In addition to managing networks, volumes, and plugins with standard Docker commands, Swarm's scheduler includes useful filters such as node tags and strategies like binpacking.
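In Swarm mode, filtering on node tags is done with node labels and placement constraints. The sketch below labels a node and then creates a service that the scheduler may only place on nodes carrying that label; the function name, the `zone` label, and the example arguments are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Sketch: label a node, then restrict a service to nodes carrying that label.
# The label key "zone", the node, service and image names are hypothetical.
pin_service_to_zone() {
  local node="$1" zone="$2" service="$3" image="$4"
  # Attach a label to the node (must run on a manager)
  docker node update --label-add "zone=${zone}" "$node" || return 1
  # Only schedule tasks on nodes whose label matches
  docker service create --name "$service" \
    --constraint "node.labels.zone==${zone}" "$image"
}
```

For example, `pin_service_to_zone worker1 eu web nginx` would run the `web` service only on `worker1` and any other node labeled `zone=eu`.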

Swarm is production-ready and, according to Docker Inc., has been tested to scale up to 1,000 nodes and 50,000 containers without performance degradation.

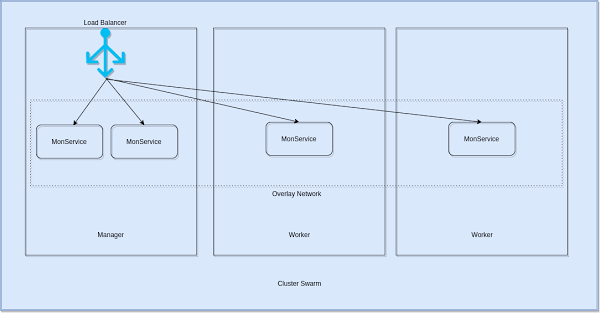

A Swarm cluster consists of managers and workers.

Managers maintain the cluster state and are responsible for executing the orchestration algorithms.

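A best practice to remember: always run an odd number of managers (1, 3, 5, etc.), never an even number. Managers rely on the Raft consensus algorithm, which uses voting: a majority of managers (the quorum) must be available to elect a leader and apply changes, which is essential for cluster availability. An even number of managers adds no fault tolerance compared to the odd number just below it.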
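The arithmetic behind this rule is easy to check. With N managers, Raft needs a majority of floor(N/2) + 1 managers to elect a leader and commit changes, so the cluster keeps working as long as no more than floor((N-1)/2) managers fail. This shell sketch (helper names are hypothetical) prints both values for small clusters:

```shell
#!/usr/bin/env bash
# Sketch: Raft quorum arithmetic for N managers (integer division rounds down).
raft_quorum() {
  echo $(( $1 / 2 + 1 ))
}

raft_fault_tolerance() {
  echo $(( ($1 - 1) / 2 ))
}

for managers in 1 2 3 4 5; do
  echo "managers=$managers quorum=$(raft_quorum "$managers") tolerated_failures=$(raft_fault_tolerance "$managers")"
done
# managers=1 quorum=1 tolerated_failures=0
# managers=2 quorum=2 tolerated_failures=0
# managers=3 quorum=2 tolerated_failures=1
# managers=4 quorum=3 tolerated_failures=1
# managers=5 quorum=3 tolerated_failures=2
```

Going from 3 to 4 managers raises the quorum without tolerating any additional failure, which is why odd numbers are recommended.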

Raft provides a generic way to distribute a state machine across a cluster of computing systems, ensuring that each node in the cluster accepts the same series of state transitions.

It has a number of open-source reference implementations, with complete specification implementations in Go, C++, Java, and Scala.

Raft achieves consensus through an elected leader. A server in a Raft cluster is always in one of three states:

- leader

- candidate

- follower

In Swarm, the leader manager sends periodic heartbeats to inform the other managers of its presence. If a follower receives no heartbeat within a certain timeout, it becomes a candidate and starts an election; each node votes for the first candidate that requests its vote in that election, and the candidate that wins a majority becomes the new leader.
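On a running cluster, the current Raft leader is visible in the `MANAGER STATUS` column of `docker node ls`: `Leader` for the leader, `Reachable` or `Unreachable` for the other managers, and empty for workers. A small sketch that extracts the leader's hostname (the function name is hypothetical; run it on a manager):

```shell
#!/usr/bin/env bash
# Sketch: print the hostname of the current Raft leader.
# Workers have an empty manager status, so only managers can match "Leader".
swarm_leader() {
  docker node ls --format '{{.Hostname}} {{.ManagerStatus}}' \
    | awk '$2 == "Leader" { print $1 }'
}
```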

In practice, take an application deployed on a cluster of 1 manager and 2 workers: we create and launch the service from the manager, and we configure, reconfigure, and scale it from that same machine.

In the example where we scale our application (called MyService) to 4 instances, the orchestrator takes care of distributing them evenly across the entire cluster.
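To see how the orchestrator spread the instances, you can count the running tasks per node. A sketch, where `tasks_per_node` is a hypothetical helper and `myservice` stands in for your service's name:

```shell
#!/usr/bin/env bash
# Sketch: count the running tasks of a service on each node (run on a manager).
# Usage: tasks_per_node myservice
tasks_per_node() {
  docker service ps --filter desired-state=running --format '{{.Node}}' "$1" \
    | sort | uniq -c
}
```

With 4 replicas on 1 manager and 2 workers, this would typically show one node running two tasks and the other two running one each, though the exact placement is up to the scheduler.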

External requests to our application can enter the cluster through any node, manager or worker. Swarm mode's internal load balancing discovers the instances dynamically distributed across the entire cluster and then balances requests among them.

This load balancing is based on a special overlay network called "ingress." When a Swarm node receives a request on a public port, this request goes through a module called IPVS. IPVS keeps track of all IP addresses participating in this service, selects one of them, and sends the request on the ingress network.
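Publishing a port is what puts a service behind the ingress routing mesh. The sketch below uses the long `--publish` syntax; the service name `web`, the `nginx` image, and port 8080 are illustrative assumptions:

```shell
#!/usr/bin/env bash
# Sketch: publish port 8080 on every swarm node and route it to port 80
# of the service's tasks through the ingress network.
# Service name, image and ports are hypothetical.
create_published_service() {
  docker service create --name web --replicas 3 \
    --publish published=8080,target=80 nginx
}
```

Once the tasks are running, a request such as `curl http://<node-ip>:8080/` should be answered by one of the replicas, whichever node's IP you use, including a node that runs no `web` task.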

If another service needs to communicate with ours (MyService), both services must be attached to the same user-defined overlay network.
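A sketch of that setup: create an overlay network, then attach two services to it. The network name `my-network` and the service names `myservice` and `client` are hypothetical, and both services reuse the `eon01/infinite` image built in section 4.

```shell
#!/usr/bin/env bash
# Sketch: two services sharing a user-defined overlay network (run on a manager).
# All names are hypothetical.
connect_services() {
  # Overlay networks span every node of the swarm
  docker network create --driver overlay my-network || return 1
  docker service create --name myservice --network my-network eon01/infinite || return 1
  docker service create --name client --network my-network eon01/infinite
}
```

Inside a `client` task, the name `myservice` then resolves through Swarm's built-in DNS to that service's virtual IP.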

3. Creating a Swarm Cluster

In what follows, we use 3 machines: 1 manager and 2 workers.

The machines must be able to communicate with each other, and the following ports must be open between them: 2377 (TCP) for cluster management, 7946 (TCP and UDP) for communication among nodes, and 4789 (UDP) for overlay network traffic. Docker must be installed on all 3 nodes.
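On Ubuntu, if the `ufw` firewall is enabled, opening these ports on each node could look like the sketch below (the helper name is hypothetical; on AWS you would add equivalent inbound rules to the instances' security group instead):

```shell
#!/usr/bin/env bash
# Sketch: allow the Swarm ports through ufw on one node.
open_swarm_ports() {
  local rule
  # 2377/tcp: cluster management, 7946/tcp+udp: node communication,
  # 4789/udp: overlay (VXLAN) traffic
  for rule in 2377/tcp 7946/tcp 7946/udp 4789/udp; do
    sudo ufw allow "$rule" || return 1
  done
}
```

In production, prefer restricting these rules to the cluster's private network, for example `sudo ufw allow from 10.0.0.0/16 to any port 2377 proto tcp`, where the subnet is a placeholder.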

The first step is to initialize the manager:

```shell
docker swarm init --advertise-addr <node_ip|interface>[:port]
```

For example, if the manager's eth0 interface has the IP address 138.197.35.0, we would initialize the cluster as follows:

```shell
docker swarm init --advertise-addr 138.197.35.0
```

Replace this IP address with your own manager's address.

The output of this command includes the command that a worker must run to join the cluster:

```shell
docker swarm join \
    --token SWMTKN-1-5b54vz0sie1li0ijr0epkhyjvmbbh2pg746skh8ba5674g1p6x-cmgrvib1disaeq08x8a5ln7zo \
    138.197.35.0:2377
```
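If you lose this output or add a worker later, the manager can print the token again. A sketch, where `MANAGER_ADDR` and the helper name are placeholders:

```shell
#!/usr/bin/env bash
# Sketch: rebuild the worker join command on the manager.
# MANAGER_ADDR is a placeholder for your manager's advertise address.
MANAGER_ADDR="${MANAGER_ADDR:-138.197.35.0}"

print_worker_join_command() {
  local token
  # -q prints only the token instead of the full instructions
  token="$(docker swarm join-token -q worker)" || return 1
  echo "docker swarm join --token ${token} ${MANAGER_ADDR}:2377"
}
```

`docker swarm join-token worker` (without `-q`) prints the full join command directly, and `docker swarm join-token manager` does the same for nodes that should join as managers.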

Run this command on each of the 2 workers; you can then list all the nodes from the manager machine with:

```shell
docker node ls
```

4. Creating and Deploying a Service on a Swarm Cluster

After creating the cluster, we can proceed to create a service and then deploy it.

Our service will be based on a simple Dockerfile:

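```dockerfile
FROM alpine

# Exec form: runs tail directly, without a /bin/sh wrapper;
# tail -f /dev/null never returns, so the container keeps running
ENTRYPOINT ["tail", "-f", "/dev/null"]
```

This image does nothing except keep its container running indefinitely once it is launched, since `tail -f /dev/null` never exits. This is why I named it "infinite."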

Next, we need to build this Dockerfile:

```shell
docker build -t eon01/infinite .
```
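The image only exists on the machine where it was built. In a multi-node swarm, each node pulls the image when it starts a task, so unless you build it on every node, push it to a registry first. A sketch, assuming a Docker Hub account that owns the `eon01` namespace (replace it with your own; `publish_image` is a hypothetical helper):

```shell
#!/usr/bin/env bash
# Sketch: push the locally built image so that every swarm node can pull it.
# Assumes a Docker Hub account owning the image's namespace.
publish_image() {
  local image="${1:-eon01/infinite}"
  # Prompts for credentials if you are not logged in yet
  docker login || return 1
  docker push "$image"
}
```

If the repository is private, adding `--with-registry-auth` to `docker service create` forwards the manager's registry credentials to the workers.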

Then, we can create a service from this image:

```shell
docker service create --name infinite_service eon01/infinite
```

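To check that the service has been created, list the swarm's services with `docker service ls`; `docker service ps` shows the service's tasks and the nodes they run on (`docker ps` only lists the containers of the local machine):

```shell
docker service ls
docker service ps infinite_service
```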

5. Scaling a Service on Swarm

We have a service that has been launched from our manager, but by default, only one instance will be deployed. One of the strengths of Swarm mode is not just scalability but also the simplicity of performing this operation. If the service is functional, all we have to do is execute:

```shell
docker service scale infinite_service=2
```

This command will create another instance in addition to the one that was already created at the beginning.

To scale up to 50 instances of the same service, we use the same command:

```shell
docker service scale infinite_service=50
```
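Scaling to 50 replicas is not instantaneous: the `REPLICAS` column of `docker service ls` shows running versus desired tasks (for example `12/50`) while Swarm converges. Recent Docker CLI versions already wait for convergence unless `--detach` is passed, so a polling helper like this sketch is mainly useful in scripts; the helper name and the default of 30 attempts two seconds apart are arbitrary assumptions.

```shell
#!/usr/bin/env bash
# Sketch: poll until every replica of a service is running, or give up.
# Usage: wait_for_replicas infinite_service [max_attempts]
wait_for_replicas() {
  local service="$1" attempts="${2:-30}" replicas=""
  while [ "$attempts" -gt 0 ]; do
    # Exact name match; REPLICAS reads "running/desired", e.g. "50/50"
    replicas="$(docker service ls --format '{{.Name}} {{.Replicas}}' \
      | awk -v s="$service" '$1 == s { print $2 }')"
    # Converged when the running count equals the desired count
    if [ -n "$replicas" ] && [ "${replicas%%/*}" = "${replicas##*/}" ]; then
      echo "${service}: ${replicas}"
      return 0
    fi
    attempts=$((attempts - 1))
    [ "$attempts" -gt 0 ] && sleep 2
  done
  echo "${service} has not converged (last seen: ${replicas:-none})" >&2
  return 1
}
```

`wait_for_replicas infinite_service` returns 0 once all replicas are running and 1 if the service has not converged in time.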

Conclusion

The Docker ecosystem consists of a rich set of tools, but orchestrators carry the most weight in the container world.

Docker Swarm is an orchestrator that ships with Docker by default. It is not complicated to use; it certainly does not have the same feature set as Kubernetes or other orchestrators, but it has its own strengths.

The evolution of Docker and Docker Swarm is dynamic and fast-paced, which is a strong point for this product, although some consider that the successive changes made to this tool should be more carefully studied and better standardized.