AI is no longer reserved for digital-native companies. It is finding its way into the products and processes of virtually every sector, yet deploying it at scale remains an unresolved and frustrating problem for most organizations. Companies can set their AI efforts up for success by scaling teams, processes, and tools in an integrated and coherent manner. This is the purpose of an emerging discipline called MLOps, or Machine Learning Operations. It seems natural that the IT department should play a central role in the strategy and evolution of this scaling effort.

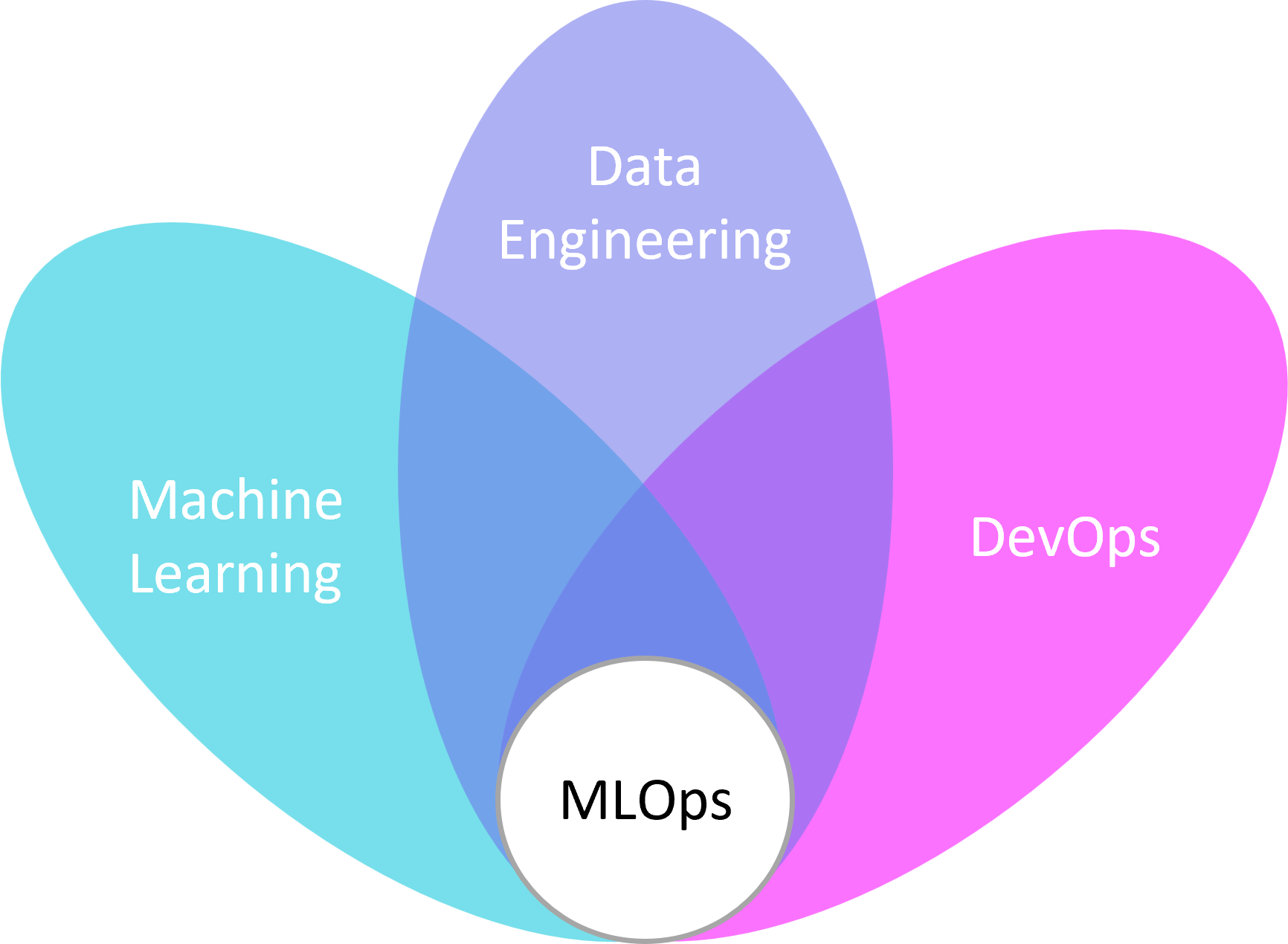

The transition from experimentation to operational AI is complex, and most companies struggle to scale. The value of use cases is often difficult to quantify, development times are long, and model deployment is complicated because it involves different professions, each with its own skills, tools, and vocabulary. The problem is therefore not only one of AI but also of organizational structure. IT departments and organizations are increasingly confronted with the omnipresence of machine learning and the complexity of its project lifecycle. MLOps addresses these issues as a discipline that brings together a set of practices, know-how, and ultimately a culture.

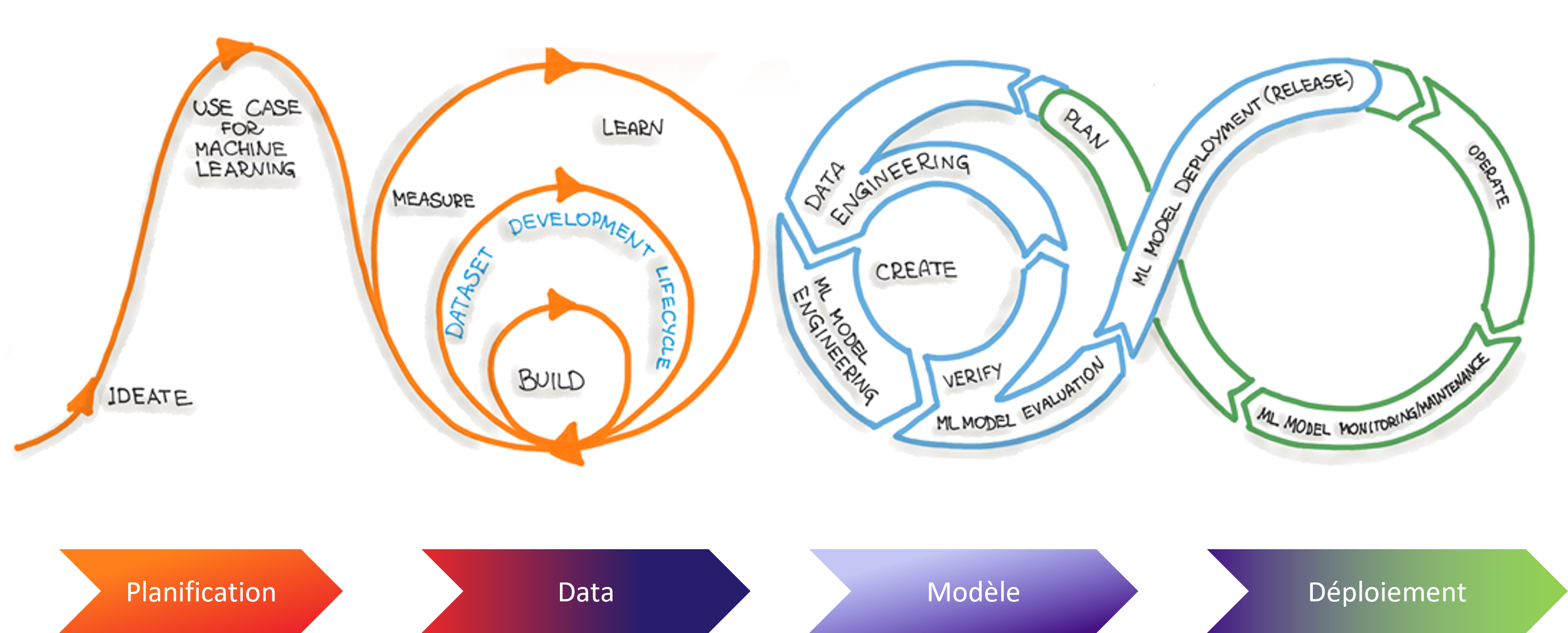

Building AI-powered models is a creative process that requires constant iterations and refinements.

AI is most useful, and best controlled, when it is operationalized throughout the value chain. Until models are put into production, the value of an AI project can never be fully demonstrated.

The various points of convergence

Is MLOps so indispensable today?

Implementing AI use cases is complex, and the challenges that organizations must overcome to evolve and adapt remain very real. The level of MLOps adoption should be calibrated to an organization's size and means, but as the organization scales, its problems scale with it. Before discussing MLOps, let alone integrating it, an organization must first have defined and implemented a data strategy (data quality, governance, etc.) that sits at its heart. Among the IT directors surveyed, there was a real consensus that MLOps is useful today, but only where a data culture already exists in the organization.

People and organizational structure, the crux of the matter?

AI development used to be the responsibility of a single AI team, but machine learning at scale cannot be built by one team alone. It requires a variety of specialized skills, and very few individuals possess them all. To scale AI successfully, organizations must build and empower teams that can each focus on the high-value strategic priorities that only they can accomplish. Let data scientists do data science, let engineers do engineering, and let IT focus on integration and infrastructure.

Why standardize processes?

The first step in scaling AI is standardization: a reproducible way to create models and a well-defined process for operationalizing them. Custom processes are, by nature, full of inefficiencies. Yet many organizations fall into the trap of reinventing the wheel every time they operationalize a model. To standardize, organizations must collaboratively define a "recommended" process for developing and operationalizing AI, and provide tools that support its adoption. Data, model, and feature stores can support this standardization.
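To make "creating models in a reproducible manner" more concrete, here is a minimal sketch of a run manifest: a small record that pins the parameters, the exact training data, and the random seed of a training run. The `RunManifest` schema, the `hash_rows` helper, and the toy `train` function are all hypothetical names introduced for illustration; real model or feature stores offer richer versions of the same idea.

```python
import hashlib
import json
import random
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class RunManifest:
    """Everything needed to recreate one training run (hypothetical schema)."""
    model_name: str
    params: dict
    data_sha256: str
    seed: int

    def fingerprint(self) -> str:
        # Canonical JSON (sorted keys) so identical inputs always hash identically.
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def hash_rows(rows: list) -> str:
    """Hash the training data so the manifest pins the exact dataset used."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def train(rows: list, params: dict, seed: int) -> float:
    """Toy stand-in for training: a seeded, slightly noisy mean of the targets."""
    rng = random.Random(seed)
    mean = sum(y for _, y in rows) / len(rows)
    return mean + rng.uniform(-params["noise"], params["noise"])


rows = [[1.0, 2.0], [2.0, 4.1], [3.0, 5.9]]
params = {"noise": 0.01}
manifest = RunManifest("demo-model", params, hash_rows(rows), seed=42)

# Re-running from the same manifest gives the same result.
first = train(rows, manifest.params, manifest.seed)
second = train(rows, manifest.params, manifest.seed)
print(first == second, manifest.fingerprint()[:12])
```

Storing the fingerprint next to each deployed model gives every team a shared identifier for "which run produced this model", which is one simple way to avoid reinventing the process for each deployment.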

In 2022, is it so easy to choose an MLOps platform?

Because standardizing AI and ML production is a relatively new endeavor, the ecosystem of data science and machine learning tools remains very fragmented: a 1000-piece puzzle. To build a single model, a data scientist works with about a dozen specialized tools. IT and governance teams, for their part, use a completely different set of tools, and these distinct toolchains do not communicate easily with each other. As a result, ad hoc collaboration is easy, but creating a robust and reproducible workflow is difficult. A scattered collection of tools can lead to long time-to-market and to AI products built without adequate monitoring, hence the importance of end-to-end platforms.

Among the existing tools in the MLOps universe, we find:

- Cloud: pioneering tools that address similar issues and are becoming widespread within organizations.

- Amazon SageMaker: an ML platform that helps you build, train, manage, and deploy machine learning models in a production-ready environment.

- Azure Machine Learning: similar to SageMaker, it supports supervised and unsupervised learning.

- Google Cloud Vertex AI: a fully managed end-to-end platform for machine learning and data science. It includes features for managing services, and its ML workflow makes things easier for developers, data scientists, and engineers.

Other notable tools:

- MLflow: an open-source platform for managing the machine learning lifecycle. It supports experiment tracking, reproducibility, and deployment.

- Domino Data Lab: favored by teams that prioritize data management, notably because it provides centralized storage and visualization spaces for MLOps data.

- Metaflow (Netflix): helps design a workflow, execute it at scale, and deploy it in production.

- HPE Ezmeral, Iguazio, Paperspace, etc.

Points to consider for the success of an MLOps strategy

- Interoperability: Ensure that new tools will work with the existing IT ecosystem or can be easily extended. For organizations transitioning from on-premises infrastructure to the cloud, look for tools that will work in a hybrid environment, as migrating to the cloud often takes several years.

- Usability for both IT and AI teams: these teams tend to have opposing needs. The platform must give data scientists the flexibility to use the libraries of their choice and to work independently, without constant technical assistance. IT, on the other hand, needs a platform that imposes constraints and ensures that production deployments follow predefined, approved paths. A well-designed MLOps platform can do both.

- Collaboration: an MLOps tool must allow data scientists to work easily with data engineers and vice versa, and both to work with governance and compliance teams. Sharing knowledge and ensuring business continuity despite employee turnover are crucial.

- Governance: with AI, governance becomes much more critical than in other applications. It is responsible for ensuring that an application aligns with the organization's ethical code, that it is not biased for or against a protected group, and that the decisions it makes are reliable.

Depending on the situation (existing systems, culture and maturity, budget, organization size, etc.), several options exist. Aneo's support is designed to take these different situations into account and to help make the best choice.
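The points above about approved deployment paths and governance checks can be sketched as a simple promotion gate. This is only an illustration under stated assumptions: the stage names, the `Candidate` fields, the required sign-offs, and the 0.1 threshold are hypothetical, and the gap in positive-prediction rates between groups is just one simple fairness signal among many.

```python
from dataclasses import dataclass

# Hypothetical approved path: models may only move one stage at a time.
APPROVED_STAGES = ["dev", "staging", "production"]


@dataclass
class Candidate:
    name: str
    stage: str
    approved_by: list
    positive_rate_by_group: dict  # share of positive predictions per group


def parity_gap(rates: dict) -> float:
    """Largest difference in positive-prediction rate between any two groups."""
    return max(rates.values()) - min(rates.values())


def can_promote(model: Candidate, target: str, max_gap: float = 0.1,
                required: tuple = ("it", "governance")):
    """Return (ok, reasons); reasons lists every rule the promotion breaks."""
    reasons = []

    # Rule 1: the deployment must follow the predefined path.
    if target not in APPROVED_STAGES or model.stage not in APPROVED_STAGES:
        reasons.append(f"unknown stage in {model.stage} -> {target}")
    elif APPROVED_STAGES.index(target) != APPROVED_STAGES.index(model.stage) + 1:
        reasons.append(f"{model.stage} -> {target} is not on the approved path")

    # Rule 2: every required team must have signed off.
    missing = [team for team in required if team not in model.approved_by]
    if missing:
        reasons.append("missing sign-off from: " + ", ".join(missing))

    # Rule 3: a basic bias check across groups.
    gap = parity_gap(model.positive_rate_by_group)
    if gap > max_gap:
        reasons.append(f"parity gap {gap:.2f} exceeds {max_gap:.2f}")

    return (not reasons, reasons)


good = Candidate("churn-v2", "staging", ["it", "governance"],
                 {"group_a": 0.42, "group_b": 0.40})
bad = Candidate("churn-v3", "dev", ["it"],
                {"group_a": 0.60, "group_b": 0.35})

print(can_promote(good, "production"))
print(can_promote(bad, "production"))
```

Data scientists remain free to build the model however they like; the gate only constrains the moment of promotion, which is where IT and governance need guarantees.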

Are we facing a shortage of (good) candidates?

Putting one or two AI models into production is very different from managing an entire AI product, and candidates who can step back and see that bigger picture are a real asset. Finding AI specialists is no longer the problem: just look at the number of master's programs flourishing in the academic world. Finding versatile candidates, however, proves more complicated.

Trends expressed by the IT directors present

- The talent shortage remains endemic.

- To get the most out of the tools, consolidation around end-to-end platforms must increase.

- Cultural adoption of ML thinking by IT is strategic for operationalizing AI.

Leaders are still looking for ways to stay ahead and create more value through artificial intelligence. However, the companies with the best models or the smartest data scientists will not necessarily prevail: success will go to those that can implement and scale AI intelligently to unleash its full potential.